Resolution is the smallest variation that a measuring instrument can detect. For example, a measuring tape with markings every 1 cm has a resolution of 1 cm, meaning it registers changes in 1 cm increments.

Resolution represents the smallest measurable increment: it is the minimum difference that can produce a detectable change in the reading.

If you try to measure the diameter of a keyhole using a measuring tape, you will get the same result every time, even after 100 repetitions, because the resolution of the tape is not sufficient to detect such small variations.

Examples:

· If the instrument measures in 1-unit steps, any value between 6.5 and 7.5 will be recorded as 7.

· If it measures in 2-unit steps, values between 7 and 9 will be recorded as 8.
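
The two cases above can be reproduced with a short sketch. The function name `record` is hypothetical, and the instrument is assumed to be ideal: it reports the nearest multiple of its step, with exact ties rounded up.

```python
import math


def record(value, step):
    """Return the reading an idealized instrument with the given step would show."""
    # Round to the nearest multiple of `step`; exact ties go up (an assumption).
    return math.floor(value / step + 0.5) * step


print(record(6.7, 1))  # recorded as 7
print(record(7.4, 1))  # recorded as 7
print(record(7.3, 2))  # recorded as 8
print(record(8.9, 2))  # recorded as 8
```

Any two inputs that land on the same step give the same reading, which is why repeating the measurement cannot reveal differences smaller than the resolution.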

🔎 Measurement Uncertainty

In a previous post (Type B Evaluation of Measurement Uncertainty), we explained that a measurement should be reported as:

(X ± ΔX) unit

Where:

· X is the best estimate of the measured value;

· ΔX is the associated uncertainty.

This means that if the measurement is repeated under the same conditions, the result is expected to fall within the range:

(X - ΔX) to (X + ΔX)

To evaluate the uncertainty of a single measurement, it is essential to consider the resolution of the instrument used.

👶 Example: length of a newborn

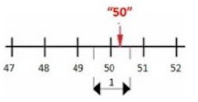

Suppose you know only that a newborn measured 50 cm in length. That information alone is not enough to estimate the uncertainty.

However, if you also know that the measurement was taken using a ruler graduated in centimeters, you can state that the actual length was between 49.5 cm and 50.5 cm. The result should be expressed as:

(50.0 ± 0.5) cm

In this case, the uncertainty arises solely from the instrument’s limitation, assuming it is properly calibrated. Because only one measurement was taken, the uncertainty is not statistical and is classified as Type B.
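
As a minimal sketch of the rule used in this example, the uncertainty of a single reading can be taken as half the smallest division of the instrument. The function name `reading_interval` is hypothetical, and the instrument is assumed to be properly calibrated.

```python
def reading_interval(reading, division):
    """Return (absolute uncertainty, lower bound, upper bound) for one reading."""
    # Half the smallest division, as in the ruler example.
    half = division / 2
    return half, reading - half, reading + half


delta, low, high = reading_interval(50.0, 1.0)
print(f"({50.0:.1f} ± {delta:.1f}) cm, between {low} cm and {high} cm")
# (50.0 ± 0.5) cm, between 49.5 cm and 50.5 cm
```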

⚖️ Example: mass of the newborn

Now suppose the scale used has divisions every 10 g (0.01 kg). If the reading is 3.54 kg, the actual mass is between 3.535 kg and 3.545 kg.

This measurement should be written as:

(3.540 ± 0.005) kg
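
A common convention is to write the value to the same decimal place as its uncertainty, as in (50.0 ± 0.5) cm. The sketch below applies that convention with Python's standard `decimal` module. The helper name `format_measurement` is hypothetical, and values are passed as strings so that no binary floating-point rounding creeps in.

```python
from decimal import Decimal


def format_measurement(value, uncertainty, unit):
    """Format as (X ± ΔX) unit, with X shown to the same decimal place as ΔX."""
    dx = Decimal(uncertainty)
    # quantize() gives X the same exponent (number of decimals) as ΔX.
    x = Decimal(value).quantize(dx)
    return f"({x} ± {dx}) {unit}"


print(format_measurement("3.54", "0.005", "kg"))  # (3.540 ± 0.005) kg
print(format_measurement("50", "0.5", "cm"))      # (50.0 ± 0.5) cm
```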

📐 Absolute and Relative Uncertainty

In the expression X ± ΔX, the term ΔX is called the absolute uncertainty (formerly known as the absolute error).

Relative uncertainty tells us how significant the absolute uncertainty is compared to the measured value. It is calculated as:

Relative uncertainty = (ΔX / X) × 100%

🚗 Example: speedometer reading

Let’s say a car speedometer is marked in increments of 2 km/h. If you read 60 km/h:

· The absolute uncertainty is half the smallest division: 1 km/h

· The relative uncertainty is: (1 / 60) × 100 ≈ 1.67%

Relative uncertainty is dimensionless, because the units cancel out. This makes it especially useful for comparing the precision of different physical quantities.
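
The speedometer calculation can be checked with a few lines of Python. This is only a sketch: `relative_uncertainty` is a hypothetical helper that divides the absolute uncertainty by the measured value and expresses the result as a percentage.

```python
def relative_uncertainty(value, absolute):
    """Relative uncertainty as a percentage of the measured value."""
    return absolute / abs(value) * 100


division = 2.0           # km/h between speedometer marks
reading = 60.0           # km/h
absolute = division / 2  # half the smallest division

print(f"absolute: {absolute} km/h")                                 # absolute: 1.0 km/h
print(f"relative: {relative_uncertainty(reading, absolute):.2f}%")  # relative: 1.67%
```

Both arguments carry the same unit, so the ratio has none.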

📊 Example: mass or length?

Back to the newborn: which measurement had greater uncertainty?

Length: (0.5 / 50) × 100 = 1%

Mass: (0.005 / 3.54) × 100 ≈ 0.14%

➡️ The length measurement had greater relative uncertainty.
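
The comparison can be reproduced as a quick check, using the values and half-division uncertainties from the two newborn examples. The variable names are illustrative only.

```python
# (measured value, absolute uncertainty) for each newborn measurement
measurements = {
    "length": (50.0, 0.5),   # cm
    "mass": (3.54, 0.005),   # kg
}

# Relative uncertainty in percent; the units cancel, so the two are comparable.
relative = {name: dx / x * 100 for name, (x, dx) in measurements.items()}

for name, pct in relative.items():
    print(f"{name}: {pct:.2f}%")  # length: 1.00%, then mass: 0.14%

least_precise = max(relative, key=relative.get)
print(least_precise)  # length
```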

These definitions apply to Type B uncertainty, but are equally valid in the context of Type A uncertainty, which is based on statistical analysis.

✏️ Practice Exercises

1. You measured a child’s height with a ruler graduated in centimeters and got 80 cm. What is the uncertainty?

2. You measured a child’s temperature with a thermometer marked in 2 °C increments and got 38 °C. What is the uncertainty?

✅ Answers

1. Height = (80.0 ± 0.5) cm

Relative uncertainty = (0.5 / 80) × 100 = 0.625%

2. Temperature = (38 ± 1) °C

Relative uncertainty = (1 / 38) × 100 ≈ 2.63%